The Bit

The bit is the most basic unit of information in computing.

The bit can take only one of two possible values: 0 or 1.
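The following minimal Python sketch makes the two-value constraint concrete. It extracts the individual bits of a non-negative integer, and every extracted bit is necessarily 0 or 1. The helper name `bits_of` and its 8-bit default width are illustrative assumptions, not part of the original text.

```python
def bits_of(value: int, width: int = 8) -> list[int]:
    """Return the `width` lowest bits of a non-negative integer, most significant first.

    `bits_of` is a hypothetical helper used only for illustration.
    """
    if value < 0:
        raise ValueError("value must be non-negative")
    # Shift each bit position down to the lowest place, then mask it with & 1.
    # The mask keeps only that single position, so the result is always 0 or 1.
    return [(value >> i) & 1 for i in reversed(range(width))]


# Example: 5 is 00000101 in 8-bit binary.
print(bits_of(5))  # [0, 0, 0, 0, 0, 1, 0, 1]
```

Each position in the returned list is one bit. Eight of them together can represent 2^8 = 256 distinct values.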

The bit records the state of a single binary signal, such as a switch that is either on or off.

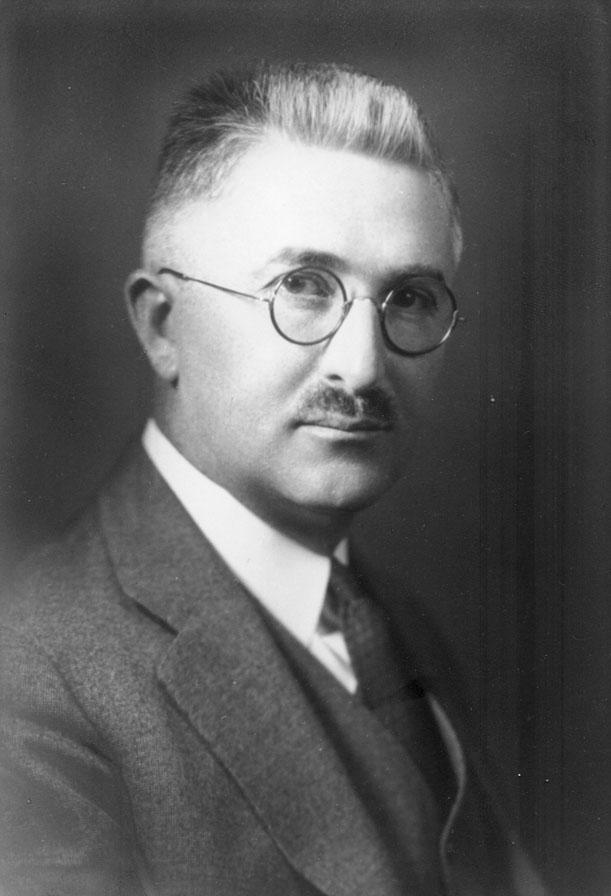

Ralph Vinton Lyon Hartley, the American electronics researcher who proposed a logarithmic measure of information in 1928.
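The worked equation below illustrates how a logarithmic measure of information connects to the bit. It uses the form usually attributed to Hartley's 1928 work; the symbols are labels chosen here. Suppose a message is built from n successive selections, each drawn from an alphabet of s equally available symbols. There are then s^n possible messages, and taking the logarithm makes the measure grow linearly with message length. Base-2 logarithms are assumed here, which makes the unit of the result the bit.

$$
H = \log_2\left(s^{n}\right) = n \log_2 s
$$

A single binary choice (s = 2, n = 1) gives H = log_2 2 = 1 bit. A sequence of eight binary choices gives H = 8 log_2 2 = 8 bits.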